Salman's Blog

Sallyman

Oct 14, 2022

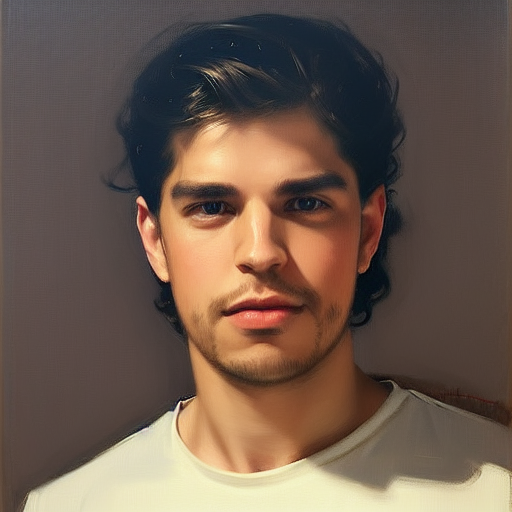

Stable Diffusion

Stable Diffusion generates images from text descriptions known as prompts.

During training, the model learned statistical associations between images and the words that describe them. It does not keep the images themselves. Instead, the key patterns it picked up across all of them are stored in a far more compact form, as weights.

These weights are numerical values that essentially represent what the model/AI has learned.
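To make "weights" a little more concrete, here is a toy sketch. It is nowhere near Stable Diffusion itself, just a single made-up weight `w` learned by gradient descent from three example pairs. The function name `train_weight`, the data, and the learning rate are all assumptions picked for illustration.

```python
def train_weight(pairs, steps=200, lr=0.01):
    """Fit y ≈ w * x by gradient descent and return the learned weight w."""
    w = 0.0  # the model starts out knowing nothing
    for _ in range(steps):
        # Gradient of the mean squared error with respect to w
        grad = sum(2 * (w * x - y) * x for x, y in pairs) / len(pairs)
        w -= lr * grad  # nudge the weight to reduce the error
    return w


# Hypothetical training data where the hidden rule is y = 2 * x
pairs = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
learned_w = train_weight(pairs)
print(round(learned_w, 4))
```

The training pairs are never stored anywhere. All that remains afterwards is the number `w`, which ends up very close to 2. A real model works on the same principle, only with an enormous number of weights instead of one.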

When Stable Diffusion analyses and compresses images (learning from them, so to speak), they are transformed into a latent space representation. This is a much smaller transformation of the data that can be turned back into an image once decoded.
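To get a feel for how much smaller the latent representation is, here is a rough back-of-the-envelope sketch. It assumes the commonly cited Stable Diffusion v1 setup: a 512x512 RGB image, downsampled by a factor of 8 on each side into 4 latent channels. The helper names `latent_shape` and `count_values` are made up for this example and are not part of any library.

```python
def latent_shape(height, width, downsample=8, latent_channels=4):
    """Return the (channels, height, width) of the latent for a given image size.

    The downsample factor and channel count are assumptions based on
    Stable Diffusion v1; other versions may differ.
    """
    if height % downsample or width % downsample:
        raise ValueError("image sides must be divisible by the downsample factor")
    return (latent_channels, height // downsample, width // downsample)


def count_values(shape):
    """Multiply the dimensions together to get the number of stored values."""
    total = 1
    for dim in shape:
        total *= dim
    return total


image_shape = (3, 512, 512)           # RGB pixels
latent = latent_shape(512, 512)       # compressed representation
ratio = count_values(image_shape) / count_values(latent)

print(latent)  # (4, 64, 64)
print(ratio)   # 48.0
```

Under these assumptions the latent holds 48 times fewer numbers than the original pixels, which is a big part of why working in latent space is cheaper than working on full images.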
